This week I had to keep pursuing my idea and develop its details. As we discussed, my goal for the next class was to think about and draw some plants in order to add complexity and personality to the moving 3D rose.

Then I tried to model a plant in 3D. These days I'm learning how to use Rhino for the Prototyping Process class, so I tried modeling it with that software. I was WRONG!

- First, it's not very straightforward to create organic surfaces, and

- Second, if you want to use your object in 3D animation software like C4D to make it look more organic, the conversion from NURBS to polygons messes everything up!

I will never do that again; I'll start my modeling all over in C4D.

Here are some shots of what I began to create in Rhino:

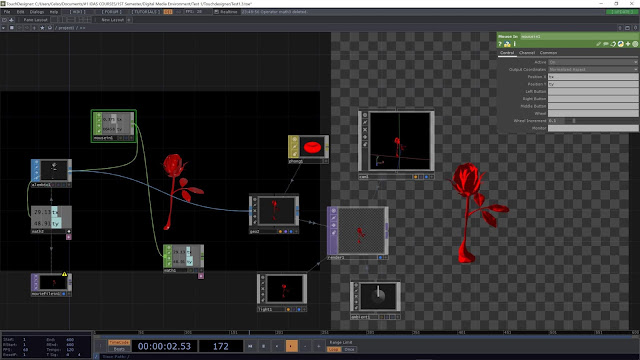

After that I worked on some improvements for my Depth Display TouchDesigner project. This display works great with long shapes, but when I added my 3D "breathing" rose alone in the composition, the perspective illusion wasn't strong. I was sad, but I could have predicted it. I hoped I could get the illusion back by positioning many roses throughout the range of the 3D camera, but in fact I don't think it looks as good as long shapes do.

This raised a big question for me, so I started reviewing my entire project idea.

Finally I came up with the idea of modeling many different plants to create a natural 3D environment. First, this environment will be well suited to this kind of display, and second, I think I can use it across many different media. I've had the idea of making a video with this environment 3D-tracked into the city, and I can also create an AR application. Personally, it's also a good occasion to build a stock of visuals that I can use for VJing or other videos.

The AR application shouldn't be a problem because I've already made something similar. When I thought about that, I suddenly realized that Unity may actually also be the easiest way to build the installation for this work. I guess I can also make a depth display and connect an Arduino to Unity. And obviously, working with 3D would be much more comfortable in Unity than in TouchDesigner, since it's a game engine.

I have to think about it.

Here are some screenshots of my TouchDesigner Depth Display tests:

Next week I will receive the gas sensor for the Arduino, so I'll be able to see whether my breathing idea works well or not.